Imagine you’re a company that has a 2-billion-row fact table joined to a 100k-row entitlements table built for row-level security. The fact table updates monthly, but entitlements change every time someone gets promoted, quits, changes title, etc. You can’t just refresh the whole data model monthly, because you’d end up with the wrong security rules applied. You also can’t refresh the entire data model multiple times a day, because refreshing that fact table would take a huge toll on your back-end systems as well as your Tableau Server. Within the Tableau UI, however, there’s no option to independently refresh the different parts of a datasource – if you schedule a refresh for your entitlements table, it’ll refresh your entire datasource.

Sometimes when you open Tableau, you’ll find that out-of-the-box functionality takes care of… well, pretty much all of your needs. There are a handful of places, however, where you’ll really need to tap into the APIs. One such use case is for row-level security scenarios where you a) can’t use Virtual Connections and b) need to refresh your entitlements table more frequently than your fact table.

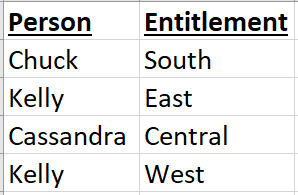

Quick vocab break: an Entitlements Table is a table which tells us who can see which data. This table should be updated as people gain or lose access to data.

So how do we solve this? Well, option 1, as mentioned above, is Virtual Connections. These allow independent scheduling for each table in the VConn, giving you the mixed freshness you desire. But some folks can’t use VConns – maybe the fact table refresh would time out, maybe you’re using an unsupported connector, maybe you have weird auth issues. How can we approach the problem in this case?

Luckily, combining Tableau’s Hyper API and REST API can address this very efficiently.

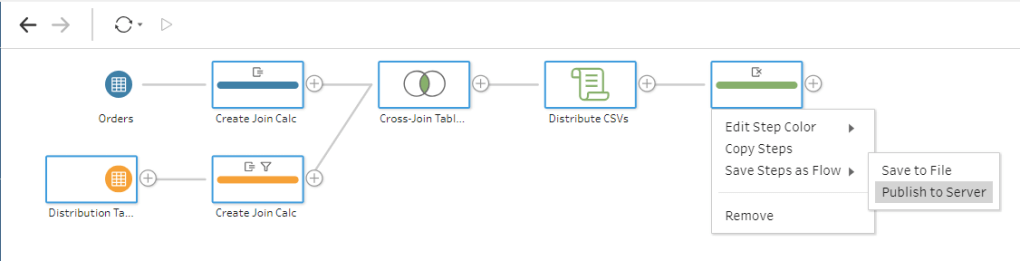

- Create an extract of your fact and entitlements tables using the Hyper API.

- Open these in Desktop, create a data model, and publish.

- Schedule no refreshes in Tableau at all!

- When entitlements change, re-create your Entitlements table using the Hyper API.

- Use Tableau’s new(ish) APIs to update just the entitlements portion of your datasource.

Now, depending on the type of person you are, that either sounds really easy or really hard, so I’m going to break it down bullet by bullet. There’s a GitHub repository with some shoddy Python code available here.

1. Create Extracts Using Hyper API

This is the heaviest lift of the whole process. The first question you’re asking is “why don’t I just use Desktop to create these?”, and it’s a valid question. For the initial publish, it’s certainly possible, but to enable a live-to-Hyper connection (which we’ll need later), we need to make sure we’re not setting this up through the native UI. The Hyper API is designed to build extract files outside of Tableau, which is exactly what we need. I’ve built a sample script here which shows how you could do it from a SQL Server deployment.

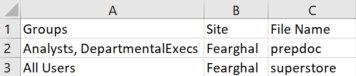

The file this creates is a single Hyper file which contains two tables: “Fact” and “Entitlement”. Because a Hyper file is data (as opposed to metadata), this doesn’t yet enforce any data security or have any relationship between the two tables.

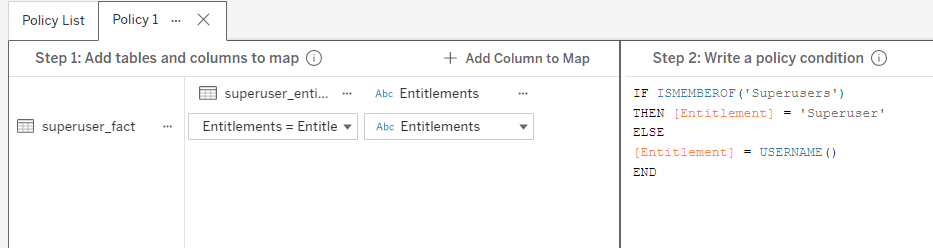

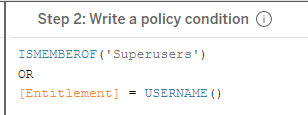

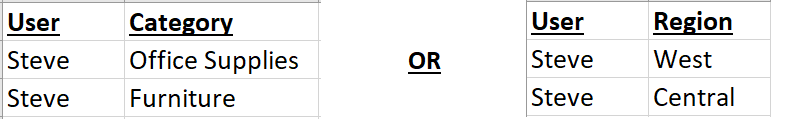

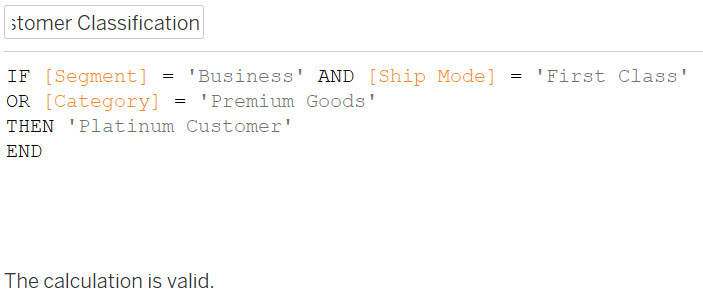

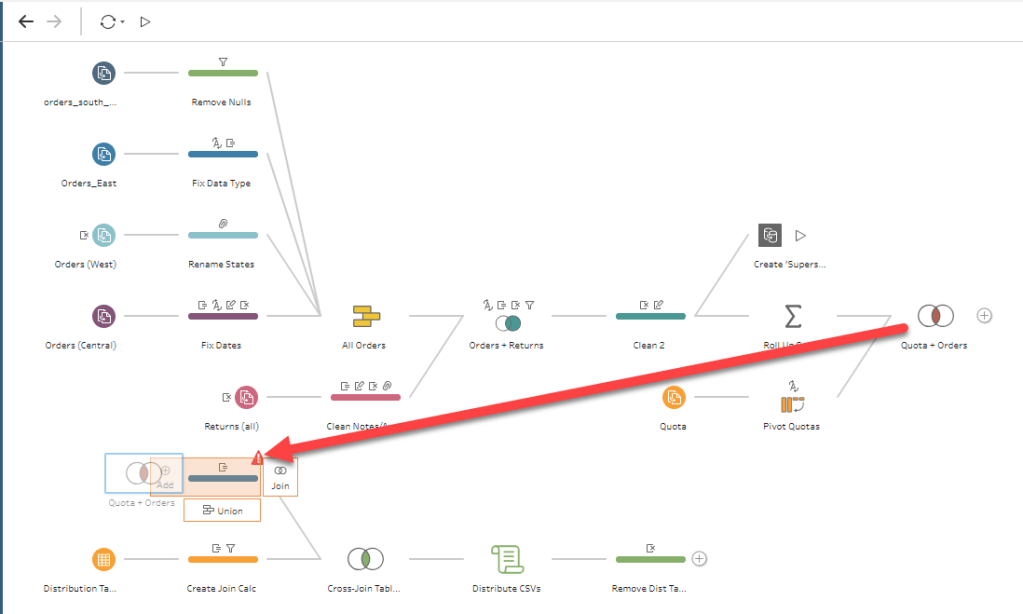
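For readers who want the shape of that script without the SQL Server plumbing, here is a minimal sketch using the `tableauhyperapi` Python package. It is not the code from the repository: the `Extract` schema name, the column names, and the helpers `expand_entitlements` and `build_hyper_file` are all assumptions made up for illustration, and the rows come from in-memory lists rather than a database.

```python
# Hypothetical schema, tables, and columns. Whatever names you pick must match
# what the published datasource uses later on.
SCHEMA = "Extract"
TABLES = {
    "Fact": [("region", "text"), ("sales", "double")],
    "Entitlement": [("username", "text"), ("region", "text")],
}


def expand_entitlements(access):
    """Flatten {username: [regions]} into one (username, region) row per grant."""
    return [
        (user, region)
        for user, regions in sorted(access.items())
        for region in regions
    ]


def build_hyper_file(path, rows_by_table):
    """Write each table in rows_by_table into a single Hyper file at path."""
    # Imported lazily so the pure helper above works without the package installed.
    from tableauhyperapi import (
        Connection, CreateMode, HyperProcess, Inserter,
        SqlType, TableDefinition, TableName, Telemetry,
    )

    sql_types = {"text": SqlType.text, "double": SqlType.double}

    with HyperProcess(telemetry=Telemetry.DO_NOT_SEND_USAGE_DATA_TO_TABLEAU) as hyper:
        with Connection(
            endpoint=hyper.endpoint,
            database=str(path),
            create_mode=CreateMode.CREATE_AND_REPLACE,
        ) as connection:
            connection.catalog.create_schema(SCHEMA)
            for table, rows in rows_by_table.items():
                definition = TableDefinition(
                    table_name=TableName(SCHEMA, table),
                    columns=[
                        TableDefinition.Column(name, sql_types[kind]())
                        for name, kind in TABLES[table]
                    ],
                )
                connection.catalog.create_table(definition)
                with Inserter(connection, definition) as inserter:
                    inserter.add_rows(rows)
                    inserter.execute()
    return path
```

Calling `build_hyper_file("rls.hyper", {"Fact": fact_rows, "Entitlement": expand_entitlements(access)})` would write both tables into one file. Passing only the `"Entitlement"` entry would produce the smaller, entitlements-only file that step 4 relies on.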

2. Create a Data Model

Double-click the Hyper file you created in Step 1 to open it in Desktop. From here, you can build your data model (join the tables but also write whatever calculations you need etc). You’ll also want to take this opportunity to build in your row-level security. Now that you’ve got data which is joined, enhanced, and secured, you can publish it to your Tableau deployment. This is no different than a normal publish process, except that the datasource (having been built/published differently) is eligible for live-to-Hyper updates.

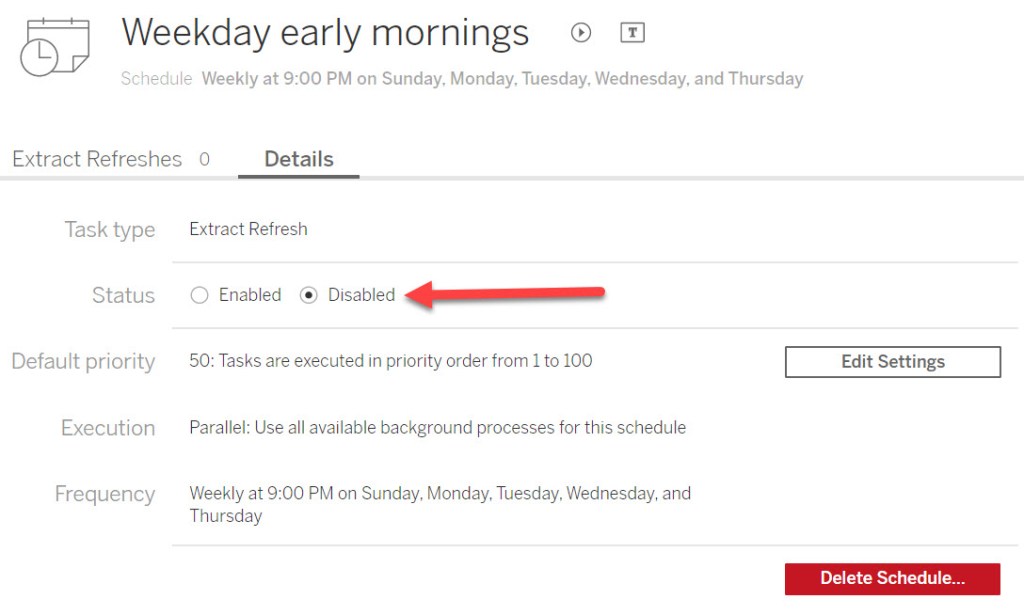

3. Schedule No Refreshes!

You’ve got it. Just resist the temptation to schedule anything in Server or Cloud. Because of the way this was built, you shouldn’t be able to anyway.

4. Re-build Entitlements as Needed

So far, we’ve just found a difficult path to creating a normal datasource, so there had better be a payoff. This is where it happens. Now imagine someone leaves your company or changes titles – they need data access revoked immediately. Historically, we’d force you to refresh your entire data model. This could take hours, be quite costly, and may not even finish before you want to update it again.

With these APIs, however, we can just re-build the Entitlements table. We can re-use the script from step 1 here, but cut out all of the lines about the Fact table. We’re rebuilding just the smaller table, which will likely take mere seconds.

5. Replace the Server-Side Entitlements

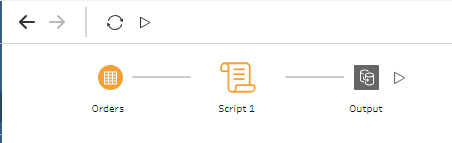

All we’ve done now is create a smaller local Hyper file. It contains none of my actual data, just my entitlements. If we were to publish this from Desktop, it would create its own datasource. Instead, we can push this table to overwrite just a portion of our existing datasource. The code provided here shows you how to:

- Execute step 4 (above)

- Identify your target datasource

- Initiate a file upload (large files must be chunked; small files can be uploaded in a single request)

- Commit the file upload to the appropriate data source and table name.

This is the final portion of the code. As long as your datasource ID, schema name, and table name line up, you can easily replace the entitlements table without touching the fact table!
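The upload-and-commit steps can also be sketched with the `tableauserverclient` (TSC) library, which manages the upload session for you. To be clear, this is a swapped-in convenience rather than the code from the repository, and it assumes a single-connection datasource, personal access token auth, and the `Extract` / `Entitlement` names. The helpers `replace_table_action` and `push_entitlements` are hypothetical, and the action payload reflects my reading of the "Update Data in Hyper Data Source" request format, so check it against the REST API reference before relying on it.

```python
import uuid


def replace_table_action(schema, table):
    """Describe replacing one target table with the same-named table in the uploaded file."""
    return {
        "action": "replace",
        "source-schema": schema,
        "source-table": table,
        "target-schema": schema,
        "target-table": table,
    }


def push_entitlements(server_url, site, token_name, token_value,
                      datasource_name, hyper_path):
    """Upload hyper_path and swap it in for the Entitlement table only."""
    # Imported lazily so the pure helper above works without the package installed.
    import tableauserverclient as TSC

    auth = TSC.PersonalAccessTokenAuth(token_name, token_value, site_id=site)
    server = TSC.Server(server_url, use_server_version=True)

    with server.auth.sign_in(auth):
        # Identify the target datasource by name.
        options = TSC.RequestOptions()
        options.filter.add(
            TSC.Filter(
                TSC.RequestOptions.Field.Name,
                TSC.RequestOptions.Operator.Equals,
                datasource_name,
            )
        )
        matches, _ = server.datasources.get(options)
        if len(matches) != 1:
            raise LookupError(
                f"expected exactly one datasource named {datasource_name!r}, "
                f"found {len(matches)}"
            )

        # Upload the file and commit it against the existing datasource.
        job = server.datasources.update_hyper_data(
            matches[0],
            request_id=str(uuid.uuid4()),  # unique ID for this update request
            actions=[replace_table_action("Extract", "Entitlement")],
            payload=hyper_path,
        )
        # The update runs asynchronously on the server; block until it finishes.
        return server.jobs.wait_for_job(job.id)
```

Filtering by name and insisting on exactly one match is a deliberately cautious choice: replacing a table in the wrong datasource is a much worse failure than stopping with an error.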

6. Confirm It Worked!

Now go re-load any dashboard downstream of that data. The data won’t have changed, but the entitlements will have. Anyone promoted will have their enhanced access, and anyone who quit will lose all access. This means you can easily kick off a flow many times a day, as people gain and lose access, without any worry about bogging down your system!

What Else?

This example is intentionally narrow, and built to solve a specific problem. There’s tons more you can do with these APIs, though! If you have a massive fact table and want to add just a small number of rows to it, this API allows you to append. If you want to maintain a file which has a rolling 12-month snapshot, you can write a simple script to delete-and-add rows. All up, this allows you far more flexibility than Tableau’s traditional refresh. Choose what data you care about, down to the row level, and manipulate it as you see fit!
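To make the append and rolling-window ideas a bit more concrete, here is a rough sketch of the action lists such a script might send. The column name `order_date`, the table name `NewRows`, and both helper functions are hypothetical, and the condition syntax (`op`, `target-col`, `const`) is my understanding of the update request format rather than something verified here, so treat it as a starting point.

```python
from datetime import date


def first_of_month_back(day, months):
    """Return the first day of the month that is `months` months before `day`."""
    index = day.year * 12 + (day.month - 1) - months
    return date(index // 12, index % 12 + 1, 1)


def append_action(schema, source_table, target_table):
    """Insert every row of the uploaded source table into the target table."""
    return {
        "action": "insert",
        "source-schema": schema,
        "source-table": source_table,
        "target-schema": schema,
        "target-table": target_table,
    }


def rolling_window_actions(today, schema="Extract", target_table="Fact",
                           new_rows_table="NewRows", date_column="order_date",
                           months=12):
    """Delete rows older than the window, then append the newly uploaded rows."""
    cutoff = first_of_month_back(today, months)
    delete_old = {
        "action": "delete",
        "target-schema": schema,
        "target-table": target_table,
        "condition": {
            "op": "lt",
            "target-col": date_column,
            "const": {"type": "datetime", "v": cutoff.isoformat() + "T00:00:00Z"},
        },
    }
    return [delete_old, append_action(schema, new_rows_table, target_table)]
```

For a run on 15 March 2024, `rolling_window_actions(date(2024, 3, 15))` would delete rows dated before 1 March 2023 and then append whatever is in the uploaded `NewRows` table.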